Emissary: The effective AI platform for model distillation

We had the pleasure of sitting down with Tanmay Chopra, CEO and founder of Emissary. In a world where GenAI could truly disrupt industries, Emissary helps companies move from AI ideas and prompts that impress in demos, built in carefully curated environments, to proprietary, ROI-positive AI that meets customer needs and expectations and clears the bar for production. Emissary makes AI make sense. Tanmay considers himself a “frustration founder”: Emissary was born out of challenges he saw firsthand as a machine learning engineer at Neeva and TikTok, and out of the obstacles he sees a new generation of AI engineers face in bringing their products to market for an increasingly discerning customer base.

Takeaway 1: Enterprises are still early in their adoption of AI

Adoption of AI is still in its very early days. Most enterprises are still developing their strategy, far from making product or infrastructure purchases, and the bulk of capital is going to consulting firms and rapid-experimentation tools. Some heavily regulated industries, such as finance and insurance, have used machine learning for decades but still rely on decision trees and have not yet ventured into neural networks, because regulators require a higher degree of explainability. Among the most prolific uses of conventional ML, including neural networks, are classifiers and recommenders: Netflix recommending movies, or TikTok separating safe from unsafe content. LLMs, however, are very powerful and unlock a set of new use cases in:

Content Compression -- Examples include summarizing meeting notes, a doctor's visit notes, or news articles, and generating TL;DRs or review summaries.

Content Expansion -- Examples include drafting an email from a topic or generating blog posts. Here, integrations with enterprise systems are important for context, and retrieval-augmented generation (RAG) helps prevent hallucination during expansion.

Content Transformation -- Examples include going from text to SQL or from text to JSON. A lot of value is also unlocked by transforming unstructured data into structured data, given the wealth of unstructured data that enterprises possess and engage with.

Most enterprises today are stuck in the strategizing or pilot phase. Rather than forcing an AI strategy, it's best to ask two simple questions:

Retroactive → Do any of my problem types fit into the content compression (summarization), expansion, or transformation buckets? If so, this is a good use of AI.

Proactive → Can I add any features upstream or downstream using AI? Ideal use cases involve more uncertainty than conventional software can handle, but not high temporal dynamicity; that is, the work consists of repeatable concepts and/or tasks that are largely, but not identically, repeatable.

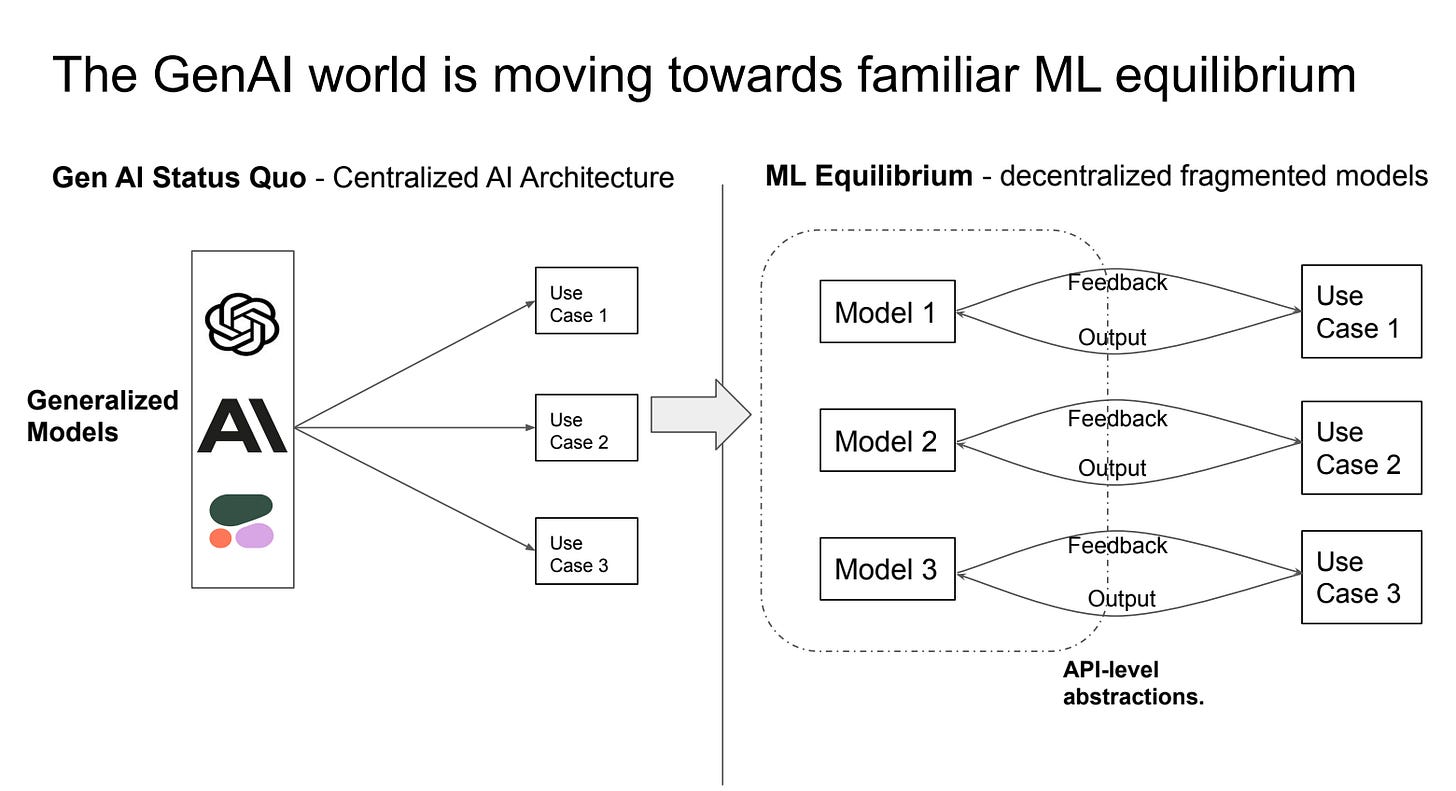

Takeaway 2: The AI equilibrium will not be one single LLM, but many smaller models

LLMs are a wonderful prototyping tool, and they have opened our imagination to the value AI can add. But as companies move into production, they have to confront trade-offs in cost, reliability, quality, and latency. Consider the simple use case of summarizing meeting notes: the same quality could be achieved with a model that is a fraction of the size of ChatGPT, and if summarization is core to the business or product, that smaller model will become the dominant choice over time. Where LLMs shine is in solving the cold-start problem: how do we get the first 10, 20, or 10,000 summarization samples needed to build the smaller model? The Emissary framework enables this transition from larger to smaller models, i.e. distillation. Emissary continuously monitors quality for a specific prompt across both the large and small models, and once the small model performs equivalently, the large model is turned off. The future of AI likely looks like many smaller models performing discrete tasks, calling a larger model only when its precision and emergent properties are really needed.
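The monitor-then-cut-over loop described above can be illustrated with a small sketch. Emissary's actual implementation isn't described here, so everything below is an assumption: the `DistillationRouter` class, the caller-supplied `score` function, the rolling `window`, and the `tolerance` are all hypothetical. While the large model is on, it serves every request and its outputs are kept as candidate training pairs (the cold-start data); the small model runs in shadow on the same prompts, and once its rolling mean quality comes within `tolerance` of the large model's, the large model is switched off.

```python
from collections import deque
from statistics import mean
from typing import Callable, Deque, List, Tuple

# Hypothetical types: a model maps a prompt to an output, and a scorer rates
# an output for a given prompt (e.g. on a 0.0 to 1.0 scale).
Model = Callable[[str], str]
Scorer = Callable[[str, str], float]


class DistillationRouter:
    """Hypothetical router: serve the large model, shadow-evaluate the small
    model on the same prompts, and cut over once quality is equivalent."""

    def __init__(self, large: Model, small: Model, score: Scorer,
                 window: int = 100, tolerance: float = 0.01) -> None:
        self.large = large
        self.small = small
        self.score = score
        self.window = window
        self.tolerance = tolerance
        # Rolling windows of the most recent quality scores for each model.
        self.large_scores: Deque[float] = deque(maxlen=window)
        self.small_scores: Deque[float] = deque(maxlen=window)
        # Large-model outputs collected as training data for the small model.
        self.training_pairs: List[Tuple[str, str]] = []
        self.large_enabled = True

    def __call__(self, prompt: str) -> str:
        if not self.large_enabled:
            # After cut-over, only the small model is called.
            return self.small(prompt)
        large_out = self.large(prompt)
        small_out = self.small(prompt)  # shadow call, not returned to the user
        self.training_pairs.append((prompt, large_out))
        self.large_scores.append(self.score(prompt, large_out))
        self.small_scores.append(self.score(prompt, small_out))
        self._maybe_cut_over()
        return large_out

    def _maybe_cut_over(self) -> None:
        # Decide only once the window is full, so a few lucky samples
        # cannot trigger the switch.
        if len(self.small_scores) < self.window:
            return
        if mean(self.small_scores) >= mean(self.large_scores) - self.tolerance:
            self.large_enabled = False
```

In practice the scorer would be a task-specific metric or a human or LLM-assisted rating, and the collected training pairs would be used to fine-tune the small model between evaluations; both are outside the scope of this sketch.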

“We really see the large language models as 3D printers. This is my favorite analogy. They’re a brilliant prototyping solution that allows a lot more people to see value in AI rapidly, that can then be captured through conventional means of scaled production (specialized models).”

If we don't end up in a world where companies run fleets of small models, much of the value will simply accrue to the chip (and cloud) companies.

Takeaway 3: The only moat in machine learning is “Interaction Data”

Proprietary data is becoming increasingly valuable in the world of generative AI, but interaction data is even more valuable. When captured and treated correctly, interaction data forms a powerful human feedback loop that helps hyperalign a concept or task to the specific style of an enterprise or individual. Emissary captures both types of data and provides a framework for continuous model iteration. In today's paradigm, different companies help manage data pipelines, data quality, and feedback, while the platforms for building, fine-tuning, and deploying models are separate; yet LLMs have shown us that data and models depend on each other and cannot be decoupled. Without this coupling, highly skilled manual labor is required at every stage, which can make it economically infeasible for enterprises to develop and manage meaningful AI capabilities. Emissary aims to be an end-to-end AI platform that merges machine learning and data infrastructure.

Special Announcement: Emissary launches Stupidly Simple Benchmarking Infrastructure

Emissary is releasing its benchmarking platform, which enables developers to evaluate prompts and manage model migrations and regressions on their own terms, rapidly and reliably. Generate and expand test sets, define custom metrics, and rate outputs collaboratively with your team, whether manually, through automated LLM-assisted evaluation, or by outsourcing rating to Emissary, all in one place.
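To make the idea of custom metrics and regression checks concrete, here is a minimal sketch of benchmarking a model migration. It does not use or describe Emissary's API; the helpers `run_benchmark` and `find_regressions`, the `max_drop` threshold, and the two example metrics (case-insensitive exact match and whitespace-token F1) are hypothetical choices made for illustration.

```python
from collections import Counter
from statistics import mean
from typing import Callable, Dict, List, Tuple

# Hypothetical types: a model under test and a metric comparing an output
# with a reference answer (higher is better).
Model = Callable[[str], str]
Metric = Callable[[str, str], float]
TestSet = List[Tuple[str, str]]  # (input, reference) pairs


def exact_match(output: str, reference: str) -> float:
    """1.0 if output equals reference, ignoring case and surrounding whitespace."""
    return float(output.strip().lower() == reference.strip().lower())


def token_f1(output: str, reference: str) -> float:
    """Token-overlap F1 between output and reference (whitespace tokenization)."""
    out_toks = output.lower().split()
    ref_toks = reference.lower().split()
    overlap = sum((Counter(out_toks) & Counter(ref_toks)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(out_toks)
    recall = overlap / len(ref_toks)
    return 2 * precision * recall / (precision + recall)


def run_benchmark(model: Model, test_set: TestSet,
                  metrics: Dict[str, Metric]) -> Dict[str, float]:
    """Run the model on every test input and average each metric."""
    outputs = [(model(x), ref) for x, ref in test_set]
    return {name: mean(metric(o, r) for o, r in outputs)
            for name, metric in metrics.items()}


def find_regressions(baseline: Dict[str, float], candidate: Dict[str, float],
                     max_drop: float = 0.02) -> List[str]:
    """Names of metrics where the candidate falls more than max_drop below baseline."""
    return [name for name, base in baseline.items()
            if candidate[name] < base - max_drop]
```

Running `run_benchmark` for the current model and for a migration candidate, then passing both results to `find_regressions`, flags the metrics that got worse. An LLM-assisted judge or averaged human ratings could be plugged in as additional functions with the same `Metric` signature.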